Digital Governance: Justice in an Unequal World

Introduction

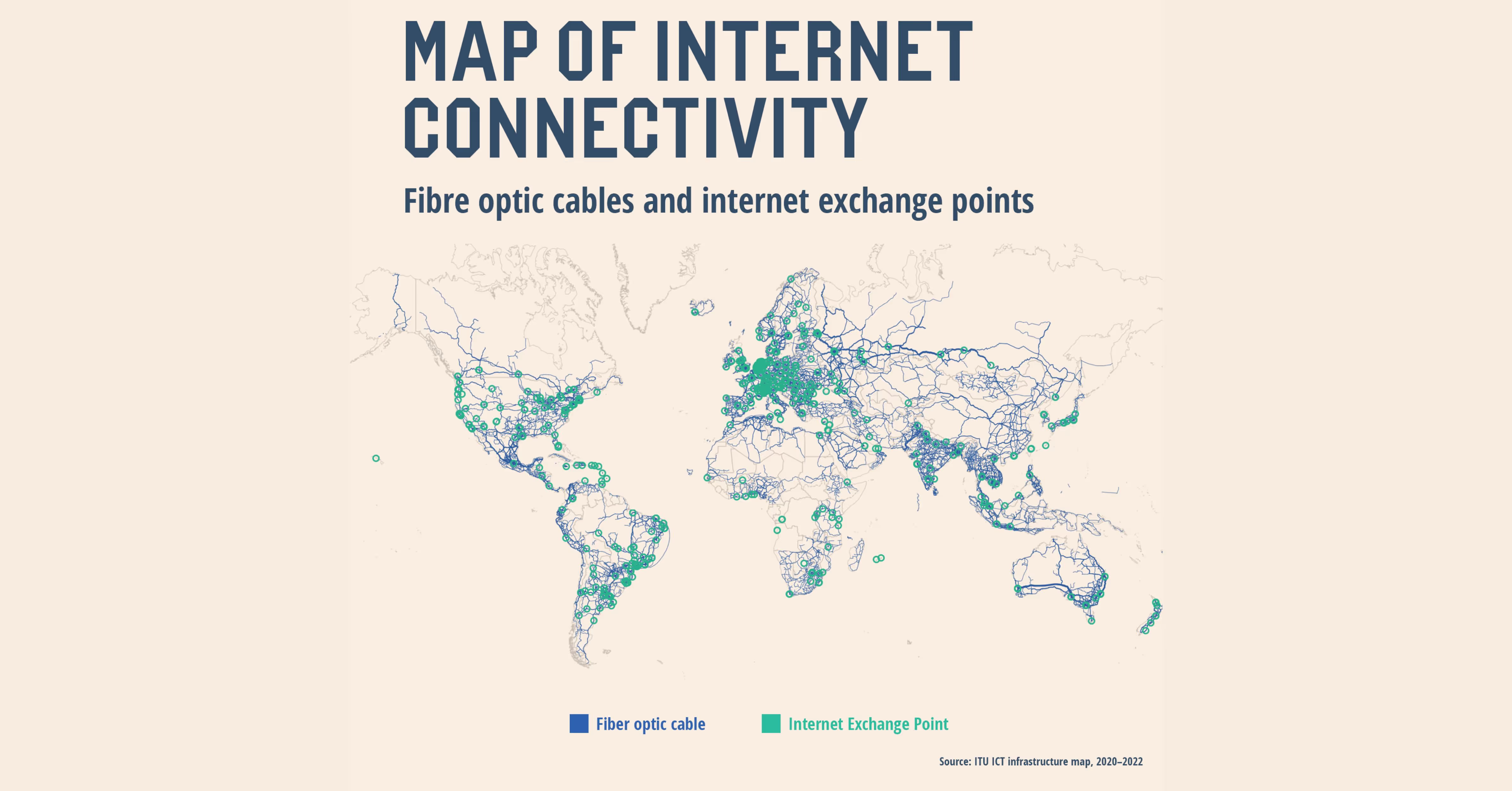

In an increasingly networked world, the rule of global supply chains is giving way to the dominance of big data and algorithms. The world is ever more connected and ever more unequal, as power concentrates in the hands of the few who shape, own and run the technologies that have become the infrastructure of the global communication environment.

In this article, we make the case that large technology corporations have a disproportionate influence over digital adoption and data collection in the Majority World, of which Malaysia is part. As digital technologies span borders, it is imperative that a framework for global governance be formed and formalised. As part of our contribution to such efforts, we have provided input to the UN Global Digital Compact consultation process, and we share our submissions in this article for wider discussion.

Too much power in the hands of too few actors

In 2019, technology reporter Kashmir Hill conducted an experiment to see if she could live without US Big Tech, namely Amazon, Apple, Facebook (now Meta), Google and Microsoft. She discovered that her daily routines became extremely difficult, because Big Tech is embedded in the infrastructure of almost every consumer online experience, from shopping to streaming to social media.

Scholars such as Lina Khan, Safiya Noble and Shoshana Zuboff have been critical of various Big Tech practices, such as the unregulated, anti-competitive monopolisation of markets, unchecked bias in training data and algorithms that perpetuates social inequalities, and the unlimited collection and hoarding of personal data. In its never-ending efforts to increase profits by increasing engagement and users, Big Tech casts a wide net of control via the provision of software, hardware and the infrastructure through which devices communicate.

At the same time, China, with the Digital Silk Road as part of its Belt and Road Initiative, has also rapidly expanded its reach into physical infrastructure, advanced technologies, digital commerce and norms-setting for cyberspace. As with their American counterparts, Chinese technology companies hold sizeable market share, with business logics based on corporate surveillance and the attention economy. While there are regional tech unicorns, such as Grab and GoTo in Southeast Asia, scholars have noted that many such unicorns receive capital from the investment arms of both Chinese and American big tech companies (among other sources) and advance the interests and culture of their corporate parents.

Overall, we see power consolidating across the globe around a mix of business and state interests, with no proportionate checks and balances to protect global stakeholders from technological harm and extractivist practices.

A shift towards regulation

However, within individual countries and policy regimes such as China, the US and the EU, governments have recognised that this unchecked control of digital resources may not always be in the public interest. Since 2020, a slew of legislation and regulations has been proposed to rein in the power of big tech companies, which have responded by increasing their spending on lobbyists to influence policymakers’ opinions.

In the EU, the Digital Services Act was proposed to protect user rights while the Digital Markets Act was proposed to establish a competitive market. In the US, at both the state and federal levels, digital privacy and protection laws are being debated that put more pressure on companies to police their platforms. China’s “tech crackdown” regulated not just algorithms and cross-border data flows but even the number of hours youths under the age of 18 could spend playing video games.

It should be mentioned that some of these regulations around technology have been fuelled by geopolitical tensions, for example between the US and China. US concerns about, and bans on, Huawei and TikTok, justified or otherwise, may have far-reaching implications for the global internet as they affect flows of information. Likewise, the standards-setting arena is affected by the increasingly adversarial relationship between the US and China. A splintering of the internet could affect interoperability and lead to more isolated information bubbles and echo chambers, and to increasingly polarised views of society.

The urgent need for participation in global governance

The increased concentration of control over global technological infrastructure, and tech regulations based on the national interests of powerful states (rather than international solidarity), have implications for small countries like Malaysia. As it stands, we are more often consumers than producers of digital technologies, and engage sparingly in global technology governance. A bifurcation of standards and rules between tech superpowers does not benefit us, whether geopolitically or in technology adoption.

Relying on industry self-regulation and ethical frameworks to produce safer technology and accountability is unlikely to work, as suggested by the dismantling of ethics departments at big tech companies like Microsoft and Twitter during the recent mass layoffs within the tech industry. Whistleblower reports have also revealed that big tech companies treat countries differently when it comes to dealing with harms, with countries outside the West given low priority.

As the world heads towards a digital-first future, in which almost every aspect of life can be mediated through the internet, global governance of digital technologies is needed to protect the public interest from state and market powers alike. A global agreement such as the Global Digital Compact proposed by the United Nations could provide the basis for shared principles and standards for digital governance. This is an opportunity for countries in the Majority World to raise their concerns about how Big Tech makes the rules and to propose alternatives for the adoption of digital technologies going forward.

Proposals for the UN Global Digital Compact

The UN Global Digital Compact is envisioned as a global agreement to outline “shared principles for an open, free and secure digital future for all”, to be adopted in the technology track of the UN Summit of the Future in September 2024. The consultation process ran until April 2023, with all stakeholders invited to propose recommendations for consideration, in one or more of the following areas:

- Connect all people to the internet, including all schools

- Avoid internet fragmentation

- Protect data

- Apply human rights online

- Introduce accountability criteria for discrimination and misleading content

- Promote regulation of artificial intelligence (AI)

- Digital commons as a global public good

- Any other areas

As researchers working on digital and technology policy, we participated in the process by submitting proposals on protecting data (Area 3) and promoting regulation of artificial intelligence (Area 6) from a global perspective. Our contributions are structured in the recommended format of i) core principles that all governments, companies, civil society organizations, and other stakeholders should adhere to and ii) key commitments to bring about these specific principles.

Protect data

Core principles

Recognising that there are many types of data in need of good governance, we limit our discussion to personal data. We support regulatory and policy efforts to improve data rights and data protections at systemic and structural levels rather than putting the burden of data protection on individuals in the name of informed consent. Thus, we would like to highlight two principles of data protection that warrant prioritisation, namely data minimisation and data privacy by design.

The principle of data minimisation requires that the collection, processing and storage of data be limited to essential purposes that are clearly described to users in advance. Per the General Data Protection Regulation (GDPR), this means that data collected must be adequate, relevant and limited to what is necessary. This principle is related to the principles of purpose limitation and storage limitation. The principle of purpose limitation requires that data not be used for purposes other than those originally intended; the principle of storage limitation requires that data be stored no longer than is necessary for the original purpose for which they were collected, and then deleted.

The principle of data privacy by design refers to a design principle that builds data protection into systems design and development. A user’s data is protected by default without any action on their part; data collection and sharing are opt-in as opposed to opt-out. As with the principle of data minimisation, the principle of data privacy by design is included in the GDPR and in general best practice for software development. Nonetheless, the lack of scrutiny, enforcement and sanctions has led to a deprioritisation of this principle. We propose that it be reintroduced as a core principle in efforts to protect data.

Key commitments

Concerns around personal data privacy and protection are fundamentally issues of human rights and social trust, even though they have been co-opted into geopolitical and national security discussions. On that shared footing, governments, corporations, academia and civil society can be united in their commitment to the following:

To set global standards for personal data regulations that encompass two types of data that are often unregulated: personal data collected by state actors and behavioural surplus. Personal data collected by state actors includes census data, tax data and health data. Behavioural surplus is data generated as a by-product of a particular digital behaviour, such as the metadata attached to an internet search, including the time of the search and the location of the person searching. These personal data can be stitched together by malicious actors for purposes of fraud, harassment or direct harm, and they require explicit regulations around their use and protection.

To extend standards of personal data minimisation globally, covering, at a minimum, three aspects. First, limit personal data collection to only what is needed for clearly stated business purposes. Second, inform users of all personal data that is being collected. Third, require deletion of personal data by commercial entities within a pre-determined period.

To set global standards of privacy by design and require that technology corporations adhere to them, ensuring, at a minimum, that three conditions are met. First, ensure that the strictest levels of privacy settings are enabled by default. Second, ensure that software and platforms are fully functional without requiring personal data. Third, ensure that personal data cannot be shared across systems without the user’s explicit permission.
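To make these commitments concrete for readers who build software, the conditions above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation of the GDPR or of any proposed global standard: the field names, the stated purposes, the one-year retention period and every function name are hypothetical and chosen only for the example.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical allow-list: each collected field is tied to a stated purpose.
ALLOWED_FIELDS = {
    "email": "account login and security notices",
    "country": "tax calculation",
}

# Assumed retention period for illustration; not taken from any standard.
RETENTION = timedelta(days=365)


@dataclass
class PrivacySettings:
    """Privacy by design: the strictest settings are the defaults."""
    share_with_third_parties: bool = False
    personalised_ads: bool = False
    location_tracking: bool = False


@dataclass
class StoredRecord:
    data: dict
    collected_at: datetime


def minimise(submitted: dict) -> dict:
    """Data minimisation: drop every field that has no stated purpose."""
    return {k: v for k, v in submitted.items() if k in ALLOWED_FIELDS}


def collection_notice() -> list[str]:
    """Inform users: list every field collected and why."""
    return [f"{name}: {purpose}" for name, purpose in ALLOWED_FIELDS.items()]


def purge_expired(records: list[StoredRecord], now: datetime) -> list[StoredRecord]:
    """Storage limitation: keep only records younger than the retention period."""
    return [r for r in records if now - r.collected_at < RETENTION]


def may_share(settings: PrivacySettings) -> bool:
    """Sharing across systems is allowed only if the user has opted in."""
    return settings.share_with_third_parties
```

In this sketch a new user starts with `PrivacySettings()` and nothing is shared until they change a setting themselves, while `minimise` ensures that data outside the declared purposes is never stored in the first place. A real system would also need audit trails, lawful-basis checks and deletion from backups, which are outside the scope of this illustration.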

Promote regulation of artificial intelligence

Core principles

We would like to reiterate our support for the extensive work already done on AI governance, enshrined within numerous UN documents such as the UN Secretary General’s Roadmap for Digital Cooperation and the UNESCO Recommendation on the Ethics of AI. The values and principles outlined in the UNESCO recommendation in particular take a holistic and systemic approach, which is preferred over other ethical frameworks that have been criticised as overly narrow and technology-focused.

In addition to what has been put forth, for the consideration of the Global Digital Compact, we would like to propose the following two principles for AI regulation.

Firstly, the principle of safeguarding against societal harms of AI. We need to prioritise protections against the ways in which AI technologies can adversely affect social relationships and reverse societal progress on issues such as gender equality and non-discrimination. The thinking behind AI harms and governance has so far focused disproportionately on individual harms, which are important, but this focus renders other harms invisible. Examples of AI technologies with societal impacts include social media recommender algorithms that prioritise user engagement and therefore tend to curate divisive content to the detriment of social cohesion, and generative AI that reduces the cost of disinformation campaigns that decrease trust in institutions and democratic processes.

Secondly, the principle of participatory governance. We need to centre the participation of underrepresented communities and localities within technology governance and rulemaking, as well as in monitoring and evaluation processes. Meaningful participation steers clear of tokenism and virtue signalling; it takes structural reform as its goal and focuses on redistributing resources, agenda-setting and decision-making power. The inclusion of marginalised voices also needs to recognise that those at the sites of AI harms are best placed to voice their concerns and propose possible mitigations; their contributions will help make AI safer for all users globally.

Key commitments

To bring about structural change in AI governance and focus on systemic harms of AI, power holders such as stronger states and big technology companies have to take on their due responsibility. Here are some proposed commitments for the larger players:

To contribute to an independent fund with the express purpose of facilitating participation in global AI governance, actively seeking out and supporting underrepresented populations from around the globe. This fund can be utilised for building and maintaining networks among high-level policymakers and those at the grassroots level globally to ensure long-term and equal partnerships, for effective communication on matters pertaining to AI governance. To ensure that the participation is bolstered with adequate technical understanding, the fund can also be utilised for capacity building.

To ensure that stringent regulatory controls for safe and responsible AI are developed and applied through a binding global governance framework, ending patchworked rules and standards which tend to disadvantage smaller countries with weaker negotiating power. Protection against harm should apply to users from all countries and not just to those within certain geographical borders, recognising that societal interests and individual rights need to be upheld universally.

To all actors, including smaller states, private companies, academia and civil society, we propose the following:

To institutionalise AI impact assessments and to embed requirements considering societal impacts at the research and development stages of AI applications. For institutions that are not producing but adopting the technologies, these assessments should also be made at the sites of application to ensure that the technology fulfils its objectives and that unintended consequences are studied and prevented or minimised.

To conduct more research and documentation on existing use of AI technologies and make the information publicly available, in order to advance understanding of positive and negative impacts, as well as to amplify use cases and best practices that have been successful and can be replicated for the public good.

To invest and participate in global networks on AI governance to facilitate effective communication on critical advances in the technology and innovations in governance frameworks and business models.

Conclusion

Our Common Agenda, the report of the UN Secretary General that led to the Global Digital Compact process, outlines clearly the need for global solidarity to improve access to, and the regulation of, digital technologies. This solidarity needs to be formalised into concrete and long-term norms and structures, particularly given the current state of geopolitical fragmentation and the destabilising power imbalance between technology owners and users.

Digital technologies and the data underlying them are reshaping the way the world lives, works and learns. Historically, the Majority World has had little say in the way in which these technologies are developed and distributed, but we hope that multilateral processes such as the Global Digital Compact will pave the way for our meaningful participation in global governance processes of AI and data.

For data-driven technologies to be safe and responsible, corporate reassurances and buzzwords will need to culminate in concrete regulations that protect the interests of all users and the societies they live in. For now, effective enforcement and implementation remain unresolved issues, but smaller states should recognise the societal implications of data and AI, and use available opportunities to strategise for safer and more equitable technology for all.